Locally testing API Gateway Docker based Lambdas

AWS Lambda is one of those technologies that blur the line between infrastructure and application code. There are many frameworks out there, some of them quite popular, such as AWS Amplify and the Serverless Framework, that let you define both your Lambda and its application code, and that provide tools to package, provision and deploy those Lambdas (using CloudFormation under the hood). They also provide tools to run the functions locally for testing, which is particularly useful when the functions are invoked through services such as API Gateway. Sometimes, however, you might want to provision a function with simpler Infrastructure as Code (IaC) tools and keep the application deployment steps separate. This is especially likely if your organisation has already adopted a tool such as Terraform. Let us explore an alternative way to run and test API Gateway based Lambdas locally without bringing in big frameworks such as the ones mentioned above.

We will make some assumptions before moving forward:

- Our Lambda will be designed to be invoked by AWS API Gateway, using the Proxy Integration.

- Our Lambda will be Docker based.

- Our Lambda has already been provisioned by another tool, so our only concern here is how to build and run it locally, and how to call it the same way any other client would via API Gateway.

Lambda code and Docker image

Let us follow the AWS documentation and write a very simple Python function that we can use throughout this project.

The Python code for our handler will be straightforward:

```python
import json


def handler(event, context):
    return {
        "isBase64Encoded": False,
        "statusCode": 200,
        "body": json.dumps(event),
        "headers": {"content-type": "application/json"},
    }
```

This handler simply returns a 200 response code with the Lambda event as its body, in JSON format.
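Because the handler is just a Python function, its logic can also be checked without Docker. Below is a minimal pytest-style sketch. The test name and sample event are hypothetical. The handler is inlined so the snippet runs on its own; in the project you would import it from lambda_function.py instead.

```python
import json


def handler(event, context):
    # Inlined copy of the handler above so this snippet is self-contained;
    # in the project you would use `from lambda_function import handler`.
    return {
        "isBase64Encoded": False,
        "statusCode": 200,
        "body": json.dumps(event),
        "headers": {"content-type": "application/json"},
    }


def test_handler_echoes_event():
    # Hypothetical sample event; any JSON-serialisable dict would do.
    event = {"request_method": "GET", "request_uri": "/?foo=bar"}

    response = handler(event, context=None)  # this handler ignores context

    assert response["statusCode"] == 200
    assert response["headers"]["content-type"] == "application/json"
    # The body is a JSON string, so decode it before comparing.
    assert json.loads(response["body"]) == event
```

If saved as, for example, test_lambda_function.py, pytest would pick this test up. That gives quick feedback before the image is even built.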

In order to package this function so that the AWS runtime can execute it, we will use the AWS-provided base Docker image and add our code to it (at the time of writing, Python's latest version was 3.12). The Dockerfile below assumes that our code is in a file named lambda_function.py. It also assumes we have a requirements.txt file listing our dependencies (in our case the file can be empty).

```dockerfile
FROM public.ecr.aws/lambda/python:3.12

# Copy requirements.txt
COPY requirements.txt ${LAMBDA_TASK_ROOT}

# Install the specified packages
RUN pip install -r requirements.txt

# Copy function code
COPY lambda_function.py ${LAMBDA_TASK_ROOT}

# Set the CMD to your handler (could also be done as a parameter override outside of the Dockerfile)
CMD [ "lambda_function.handler" ]
```

Running and testing the Lambda function

In order to test that this all works as expected, we need to build that Docker image and run it:

```shell
docker build -t docker-image:test .
docker run -p 9000:8080 docker-image:test
```

The above commands do exactly that, mapping the container port 8080 to the local port 9000.

As per the documentation, in order to test this function and see an HTTP response, it is not sufficient to just make an HTTP request to http://localhost:9000. If we were to do this, we would simply get back a 404 response. After all, our function could be triggered in the real world not just by HTTP requests but by many other events, such as a change to an S3 bucket, or a message being pulled from an SQS queue.

Behind the scenes, any invocation of a Lambda function eventually happens via an API call. When we make an HTTP request that is eventually served by a Lambda function, what is happening is that some other service (for example AWS API Gateway, or an AWS ALB) transforms that HTTP request into an event, then that event is passed to the Lambda Invoke method as a parameter, and the Lambda response gets mapped back to an HTTP response.
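The last step of that round trip can be sketched in a few lines. The function below is a hypothetical illustration, not the actual implementation of API Gateway, an ALB or Kong. It shows how a gateway might turn a proxy-integration style result, like the one our handler returns, back into an HTTP response. It reads `statusCode` and `headers`, then decodes `body`, base64-decoding it first when `isBase64Encoded` is true.

```python
import base64
import json


def to_http_response(lambda_result):
    """Map a proxy-integration style Lambda result to (status, headers, body bytes).

    Hypothetical sketch: real gateways also handle multi-value headers,
    cookies, malformed results and error payloads.
    """
    status = int(lambda_result["statusCode"])
    headers = dict(lambda_result.get("headers") or {})
    body = lambda_result.get("body") or ""

    if lambda_result.get("isBase64Encoded", False):
        # Binary payloads arrive as base64 text and must be decoded first.
        body_bytes = base64.b64decode(body)
    else:
        body_bytes = body.encode("utf-8")

    return status, headers, body_bytes


# Example: a result shaped like the one our handler returns.
status, headers, body = to_http_response({
    "isBase64Encoded": False,
    "statusCode": 200,
    "body": json.dumps({"hello": "world"}),
    "headers": {"content-type": "application/json"},
})
print(status, headers["content-type"], body)  # 200 application/json b'{"hello": "world"}'
```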

For local testing, the AWS-provided base Docker images come with the Runtime Interface Emulator (RIE). This is a small proxy that exposes an HTTP API endpoint through which the function can be invoked.

In order to get our local Lambda to reply with a response, this is what we need to do instead:

```shell
curl "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{}'
```
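The same invocation can be scripted from Python with only the standard library, which is handy for repeatable local checks. Below is a minimal sketch, assuming the container is running with the `-p 9000:8080` mapping used earlier. The helper names `build_invoke_request` and `invoke` are hypothetical.

```python
import json
import urllib.request

# Local endpoint exposed by the emulator in the AWS base image,
# assuming the container was started with `-p 9000:8080`.
RIE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"


def build_invoke_request(event, url=RIE_URL):
    """Build a POST request whose body is the JSON-encoded event."""
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(event, url=RIE_URL, timeout=10):
    """Invoke the local function and return its result decoded from JSON."""
    with urllib.request.urlopen(build_invoke_request(event, url), timeout=timeout) as resp:
        return json.loads(resp.read())
```

With the container running, `invoke({})` should return the handler's result as a dictionary whose `body` is `"{}"`. Building the request in a separate function means it can be inspected without a running container.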

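The curl command above invokes the Lambda with an empty event. Behind a gateway, however, the function receives an event describing the incoming HTTP request. With the Kong setup described later in this article, a GET request produces an event similar to the one below. A real AWS API Gateway Proxy Integration event has a different, richer shape, with fields such as `httpMethod`, `path`, `headers`, `queryStringParameters` and `body`: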

```json
{
  "request_uri": "/",
  "request_headers": {
    "user-agent": "curl/8.1.2",
    "content-type": "application/json",
    "accept": "*/*",
    "host": "localhost:8000"
  },
  "request_method": "GET",
  "request_uri_args": {}
}
```
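Crafting such payloads by hand gets tedious, so a small helper can generate them. The sketch below is hypothetical. `build_event` only mimics the four fields in the example above; it does not reproduce the exact logic of Kong or API Gateway. It derives `request_uri_args` from the query string using the standard library.

```python
from urllib.parse import parse_qsl, urlsplit


def build_event(method, uri, headers=None):
    """Build an event with the same fields as the example above (hypothetical helper)."""
    query = urlsplit(uri).query
    return {
        "request_uri": uri,
        # Header names are lower-cased, as in the example event.
        "request_headers": {k.lower(): v for k, v in (headers or {}).items()},
        "request_method": method.upper(),
        # Simplification: a repeated query key keeps only its last value.
        "request_uri_args": dict(parse_qsl(query)),
    }
```

For example, `json.dumps(build_event("GET", "/?foo=bar"))` produces a payload that can be passed to the curl command above with `-d`.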

In some cases, testing our Lambda locally by carefully crafting curl commands with JSON payloads might be good enough. Sometimes, however, we need to hit our Lambda locally just as we would if the AWS API Gateway Proxy Integration were in place. A good example is testing how our Lambda interacts with other components we are also running locally, such as a front end in a web browser making GET requests to it. This is where the bigger frameworks come in handy, since they have such tools built in.

Kong API Gateway to the rescue

An alternative way to get the local testing behaviour that frameworks such as Amplify or the Serverless Framework provide is to use an open source API gateway called Kong. Kong is a large API gateway product with many features. In a nutshell, it takes an incoming HTTP request, optionally transforms it, sends it to a backend service, optionally transforms the response, and sends that back to the client. Among the backends Kong supports out of the box, through its aws-lambda plugin, are AWS Lambda functions. One could argue that using something like Kong just to test our Lambda is no different from going the framework route. However, there are a couple of things I find particularly relevant here:

- Kong can be run via Docker, which we already need to package and run our Lambda. This means we do not have to install any new tool in our local setup.

- This solution allows us to keep our Lambda setup small and simple, and we are not forced to follow any framework's way of organising our source code.

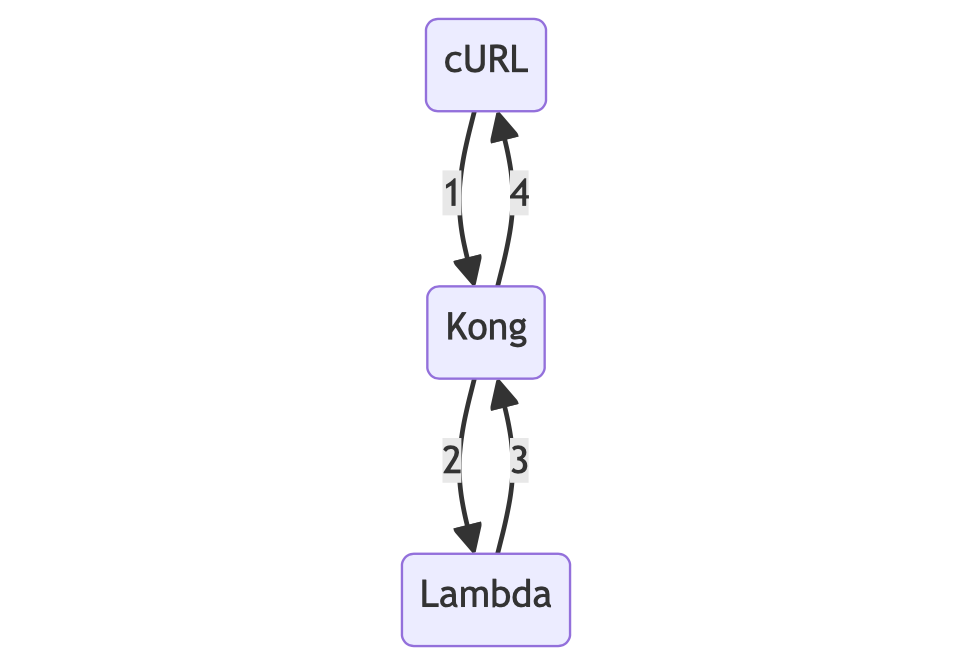

So our final setup is going to look like this:

In order for this to work, we need to configure Kong to proxy HTTP requests to our Lambda. We can do this by using a declarative configuration that uses the aws-lambda plugin on the / route.

We can achieve this using this kong.yml configuration file:

```yaml
_format_version: "3.0"
_transform: true

routes:
  - name: lambda
    paths: [ "/" ]

plugins:
  - route: lambda
    name: aws-lambda
    config:
      aws_region: eu-west-1
      aws_key: DUMMY_KEY
      aws_secret: DUMMY_SECRET
      function_name: function
      host: lambda
      port: 8080
      disable_https: true
      forward_request_body: true
      forward_request_headers: true
      forward_request_method: true
      forward_request_uri: true
      is_proxy_integration: true
```

A few things worth mentioning:

- The `aws_key` and `aws_secret` values are mandatory for the plugin to work. However, we do not need to put any real secrets in there, since the invocation happens locally.
- `function_name` should stay hardcoded as `function`, as this is the function name the Runtime Interface Emulator uses by default.
- The `host` and `port` values should point to your local Docker container running the Lambda function. In our case we use `lambda` and `8080`, as we will run this whole solution in a single Docker Compose setup where the Lambda runs in a container named `lambda`.
- We need to set `disable_https` to `true`, as our Lambda container is not able to handle SSL.
- The rest of the configuration options can be tweaked depending on our specific needs, and they are all documented on the Kong website. The values shown here will work for an AWS Lambda Proxy Integration setup using AWS API Gateway, but the Kong plugin supports other types of integrations. Note that `is_proxy_integration` controls how the Lambda's response is interpreted. The event the function receives still uses Kong's own `request_*` fields rather than the API Gateway event format; the plugin's `awsgateway_compatible` option exists for the latter.

Putting it all together

So far we have built a Docker based Lambda function and we are able to run it locally. We have also seen how to configure Kong API Gateway to proxy HTTP requests to that function. We will now look at what a Docker Compose setup might look like to run it all in a single project and command.

The full source code for this can be found in brafales/docker-lambda-kong. I recommend checking it out to see the whole project structure.

We will assume we have the following folders in our root:

- `lambda`: here we will store the Lambda function source code and its Dockerfile.
- `kong`: here we will store the declarative configuration for Kong, which will allow us to set it up as a proxy for our function.

And then in the root we can have our docker-compose.yml file:

```yaml
services:
  lambda:
    build:
      context: lambda
    container_name: lambda
    networks:
      - lambda-example

  kong:
    image: kong:latest
    container_name: kong
    ports:
      - "8000:8000"
    environment:
      # Quoted so YAML parsers treat it as the string "off", not a boolean.
      KONG_DATABASE: "off"
      KONG_DECLARATIVE_CONFIG: /usr/local/kong/declarative/kong.yml
    volumes:
      - ./kong:/usr/local/kong/declarative
    networks:
      - lambda-example

networks:
  lambda-example:
```

This file does the following:

- Creates a Docker network called `lambda-example`. This is optional, since the default network created by Compose would work equally well.
- Defines a Docker container named `lambda` and instructs Compose to build it using the contents of the `lambda` folder.
- Defines a Docker container named `kong`, using the Docker image `kong:latest` and mapping our `kong` folder to the container path `/usr/local/kong/declarative`. This allows the container to read our declarative config file, whose path we pass in the environment variable `KONG_DECLARATIVE_CONFIG`. We also set `KONG_DATABASE` to `off` to instruct Kong not to look for a database to read its configuration from. Finally, we map the container port `8000` to our localhost port `8000`.

With all this in place, we can now simply run the following command to spin it all up:

```shell
docker compose up
```
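Kong and the Lambda container need a few seconds to start. A script that runs straight after `docker compose up -d` (in CI, say) may therefore want to wait until requests actually get through. Below is a minimal polling sketch using the standard library. `wait_until_ready` and its defaults are hypothetical, and the `opener` parameter exists only so the function can be exercised without a network. Treating 5xx responses as "not ready yet" is an assumption: Kong is likely to answer with a server error while it cannot reach the Lambda.

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(url="http://localhost:8000/", attempts=30, delay=1.0,
                     opener=urllib.request.urlopen):
    """Poll url until it answers without a 5xx error.

    Returns the number of attempts it took; raises TimeoutError if none succeeded.
    """
    for attempt in range(1, attempts + 1):
        try:
            with opener(url, timeout=5) as resp:
                resp.read()
            return attempt
        except urllib.error.HTTPError as err:
            # 4xx: Kong is up and answering. 5xx: assume Kong cannot reach
            # the Lambda container yet, so keep waiting.
            if err.code < 500:
                return attempt
        except OSError:
            # Connection refused, reset or timed out: nothing listening yet.
            pass
        if attempt < attempts:
            time.sleep(delay)
    raise TimeoutError(f"{url} was not ready after {attempts} attempts")
```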

Once everything is up and running, we can reach our Lambda function using curl or any other HTTP client, just as we would if it were deployed to AWS behind API Gateway:

```console
➜ curl -s localhost:8000 | jq .
{
  "request_method": "GET",
  "request_body": "",
  "request_body_args": {},
  "request_uri": "/",
  "request_headers": {
    "user-agent": "curl/8.1.2",
    "host": "localhost:8000",
    "accept": "*/*"
  },
  "request_body_base64": true,
  "request_uri_args": {}
}

➜ curl -s -X POST localhost:8000/ | jq .
{
  "request_method": "POST",
  "request_body": "",
  "request_body_args": {},
  "request_uri": "/",
  "request_headers": {
    "user-agent": "curl/8.1.2",
    "host": "localhost:8000",
    "accept": "*/*"
  },
  "request_body_base64": true,
  "request_uri_args": {}
}

➜ curl -s localhost:8000/?foo=bar | jq .
{
  "request_method": "GET",
  "request_body": "",
  "request_body_args": {},
  "request_uri": "/?foo=bar",
  "request_headers": {
    "user-agent": "curl/8.1.2",
    "host": "localhost:8000",
    "accept": "*/*"
  },
  "request_body_base64": true,
  "request_uri_args": {
    "foo": "bar"
  }
}
```
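These manual checks can also be scripted. Below is a minimal smoke-test sketch using only the Python standard library. It assumes the Compose stack above is running on localhost:8000 and that the handler echoes its event. `fetch_event`, `check_echo` and `run_smoke_tests` are hypothetical names, and the assertions only cover fields visible in the output above.

```python
import json
import urllib.request
from urllib.parse import parse_qsl, urlsplit

# Kong's proxy port, as published in docker-compose.yml.
GATEWAY_URL = "http://localhost:8000"


def fetch_event(path="/", method="GET"):
    """Send a request through Kong and return the event echoed back by the Lambda."""
    req = urllib.request.Request(GATEWAY_URL + path, method=method)
    with urllib.request.urlopen(req, timeout=10) as resp:
        # Our handler puts json.dumps(event) in the body, so decode it back.
        return json.loads(resp.read())


def check_echo(event, method, path):
    """Assert that the echoed event describes the request that was sent."""
    assert event["request_method"] == method
    assert event["request_uri"] == path
    # Kong exposes query parameters separately as request_uri_args.
    expected_args = dict(parse_qsl(urlsplit(path).query))
    assert event["request_uri_args"] == expected_args


def run_smoke_tests():
    """Mirror the three curl calls above; raises AssertionError on a mismatch."""
    for method, path in [("GET", "/"), ("POST", "/"), ("GET", "/?foo=bar")]:
        check_echo(fetch_event(path, method), method, path)
```

With the stack up, `run_smoke_tests()` should return silently. `check_echo` makes no network calls, so it can also be unit tested against saved responses.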